Introduction

In this article I am going to go into detail about how .NET treats loops involving array and collection access and what kinds of optimizations you can expect. I had mentioned some of these in a previous article, but in this article, we’re going to go deep and see many more examples. We’re going to dig into the IL that gets generated as well the x64 assembly code that actually executes.

Will these optimization matter to your program? Only if your program is CPU bound and collection iteration is a core part of your processing. As you’ll see, there are ways you can hurt your performance if you’re not careful, but that only matters if it was a significant part of your program to begin with. My philosophy is this: most people never need to know this, but if you do, then understanding every layer of the system is important so that you can make intelligent choices. That’s the reason I wrote my book, Writing High-Performance .NET Code, and why I write these articles—to give you the information about what’s actually going on when you compile and execute a .NET program.

Some of this information you can read elsewhere, but I want to show you how to get at this yourself so that you don’t have to rely on someone publically coming out with this information, especially as new version of the JIT are released.

Tools I used: ILSpy to view IL, Visual Studio 2013 to debug and view assembly with .NET 4.5. I compiled the code in Release mode, targeting x64 explicitly. When debugging, don’t start with debugging from Visual Studio–that will not give you an accurate picture of your code. Instead, start the program separately and attach the debugger. I accomplish this easily by putting a call to Debugger.Launch() in the beginning of Main(), and then executing the program with Ctrl-F5.

A note on assembly. I chose x64 because it should be the default for development these days. For register names mentioned below, understand that things like eax and rax refer to the same register–rax is the 64-bit version of this register, and is essentially a sign-extended version of eax, but they both refer to the same physical location. Same with ecx/rdx, ecx/rcx, etc.

Bounds Checks

Bounds checks exist in .NET because the CLR must ensure memory safety at all times in managed code. It needs to simply be impossible for managed code to corrupt memory outside of the defined data structures. This means that code like the following cannot be allowed to execute:

int[] array = new int[100];

array[100] = 1;

Attempting to execute this should result in an exception rather than allow the memory corruption to occur. That means that the CLR has to inject bounds checking into array access. This can make repeated array access twice as slow if some optimizations aren’t done. This knowledge makes some people leery of using .NET for high-performance code, but we’ll see that the JIT compiler does indeed make these optimizations in some cases (and falls short in others). However, remember what I said in the introduction: these kinds of optimizations only matter if you’re CPU bound and this code is in your innermost, most called-upon code. That said, even if you don’t have to worry about performance on this level, knowing how to investigate these types of details is instructive.

Baseline Loop

To start, let’s take a look at the most basic of loops:

[MethodImpl(MethodImplOptions.NoInlining)]

private static long GetSum(int[] array)

{

long sum = 0;

for (int i =0; i < array.Length; i++)

{

sum += array[i];

}

return sum;

}

It simply loops through the entire array and sums all the elements. I’m explicitly disabling inlining because that can muddy the analysis a bit, and we want to focus strictly on loop optimization, not other improvements that the JIT compiler can and will do here.

In IL, this method looks like this (with my own annotations):

.method private hidebysig static

int64 GetSum (

int32[] 'array'

) cil managed noinlining

{

// Method begins at RVA 0x20a4

// Code size 26 (0x1a)

.maxstack 3

.locals init (

[0] int64 sum,

[1] int32 i

)

IL_0000: ldc.i4.0 // Push 0 onto evaluation stack as a 4-byte integer

IL_0001: stloc.0 // Pop from evaluation stack and store into local variable at location 0, sum.

IL_0002: ldc.i4.0 // Push 0 to evaluation stack

IL_0003: stloc.1 // Pop and store in local variable i.

IL_0004: br.s IL_0012 // Jump to IL_0012 (unconditionally)

// loop start (head: IL_0010)

IL_0006: ldloc.0 // Push sum onto stack

IL_0007: ldarg.0 // Push array onto stack

IL_0008: ldloc.1 // Push i onto stack

IL_0009: ldelem.i4 // Pop array and i off the stack and push array[i] onto the stack

IL_000a: add // Pop array[i] and sum off the stack, add, and push result to stack

IL_000b: stloc.0 // Pop result off stack and store in sum

IL_000c: ldloc.1 // Push i onto stack

IL_000d: ldc.i4.1 // Push 1 onto stack

IL_000e: add // Pop i and 1 off of stack, add, and push result to stack

IL_000f: stloc.1 // Pop result off stack and store in i

IL_0010: ldloc.1 // Push i onto stack

IL_0011: ldarg.0 // Push array onto stack

IL_0012: ldlen // Pop array off of stack, get length, and push length onto stack

IL_0013: conv.i4 // Convert length to 4-byte integer

IL_0014: blt.s IL_0006 // Pop i and length off of stack. If i < length, jump to IL_0006

// end loop

IL_0016: ldloc.0 // Push sum onto stack

IL_0017: ret // Return

} // end of method Program::GetSum

That looks like an awful lot of work to execute a loop. IL is a simple, stack-based language that ends up being quite verbose, but this gets translated by the JIT compiler into real machine code that takes advantage of efficient CPU instructions and registers. The resulting assembly is this:

00007FFADFCC02D0 mov rdx,rcx // Copy array address to rdx

00007FFADFCC02D3 xor ecx,ecx // Set ecx (sum) to 0

00007FFADFCC02D5 mov r10,qword ptr [rdx+8] // Copy array length to r10

00007FFADFCC02D9 test r10d,r10d // See if length is 0

00007FFADFCC02DC jle 00007FFADFCC0303 // If so, skip the loop

00007FFADFCC02DE mov r8d,ecx // Copy 0 to r8 (source of ecx is not relevant)

00007FFADFCC02E1 xor r9d,r9d // Set r9 to 0 (memory offset into array)

00007FFADFCC02E4 nop word ptr [rax+rax] // NOP

00007FFADFCC02F0 mov eax,dword ptr [rdx+r9+10h] // Copy array[i] to eax

00007FFADFCC02F5 add ecx,eax // Add value to sum

00007FFADFCC02F7 inc r8d // i++

00007FFADFCC02FA add r9,4 // Increment memory address by 4 bytes

00007FFADFCC02FE cmp r8d,r10d // i < length ?

00007FFADFCC0301 jl 00007FFADFCC02F0 // If so, loop back a few statements for another iteration

00007FFADFCC0303 mov eax,ecx // Copy sum to return register

00007FFADFCC0305 ret // Return

There are a few things to notice here:

- The code is tracking both the index value

i as well as the direct memory address of the array values, which is far more efficient than doing a multiplication and offset on every loop iteration.

- There are no array bounds checks! This is sometimes a worry with people new to .NET—how can we maintain memory safety without boundary checks? In this case, the JIT compiler determined that the boundary checks weren’t necessary because it knew all of the constraints of this loop.

Bringing Back the Bounds Check

Now let’s introduce some variability. If instead, we pass in some boundaries to iterate through, does this change the situation?

[MethodImpl(MethodImplOptions.NoInlining)]

private static int GetSum(int[] array, int start, int end)

{

int sum = 0;

for (int i = start; i < end; i++)

{

sum += array[i];

}

return sum;

}

.

.

.

// Called with:

GetSum(array, 25, 75);

The IL differences aren’t very interesting so I won’t show it, but the machine instructions definitely change:

00007FFADFCC0320 sub rsp,28h // Adjust stack pointer

00007FFADFCC0324 mov r9,rcx // Copy array address to r9

00007FFADFCC0327 xor ecx,ecx // Set ecx (sum) to 0

00007FFADFCC0329 cmp edx,r8d // edx has i in it, initialized with start (25). Compare that to r8, which has the end value (75)

00007FFADFCC032C jge 00007FFADFCC0348 // If start >= end, skip loop.

00007FFADFCC032E mov r10,qword ptr [r9+8] // Copy array length to r10

00007FFADFCC0332 movsxd rax,edx // Copy start to rax

00007FFADFCC0335 cmp rax,r10 // Compare i to array length

00007FFADFCC0338 jae 00007FFADFCC0350 // If i >= length, jump to this address (below)

00007FFADFCC033A mov eax,dword ptr [r9+rax*4+10h] // Copy array[i] to eax

00007FFADFCC033F add ecx,eax // sum += array[i]

00007FFADFCC0341 inc edx // i++

00007FFADFCC0343 cmp edx,r8d // Compare i to end value

00007FFADFCC0346 jl 00007FFADFCC0332 // If i < jump to beginning of loop (above)

00007FFADFCC0348 mov eax,ecx // Copy sum to return register

00007FFADFCC034A add rsp,28h // Fix up stack pointer

00007FFADFCC034E ret // Return

00007FFADFCC0350 call 00007FFB3F7E6590 // Throw an exception!

The most significant difference is that now on every single iteration of the loop, it’s comparing the array index to the length of the array, and if it goes over we’ll get an exception. With this change, we’ve doubled the running time of the loop. The bounds check is there.

So why does it do this now? Is it because we’re doing a subarray? That’s easy to test. You can modify the original function at the top of this article to iterate from 25 to 75 and you’ll see that the compiler does not insert a boundary check.

No, the reason for the boundary check in this case is because the compiler can’t prove that the input values are 100% safe.

Ok, then. What about checking the start and end variables before the loop? I suspect that quickly leads to the problem of trying to understand the code. As soon as the constraints are not constant, you have to deal with variable aliasing, side effects, and tracking the usage of each value—and that’s not really practical for a JIT compiler. Sure, in my simple method, maybe it would be easy, but the general case might be extremely difficult if not impossible.

Other Ways of Getting Bounds Checks

Even simple modifications to the indexing variable, that you can logically prove cannot exceed the array boundaries may cause the bounds check to appear:

[MethodImpl(MethodImplOptions.NoInlining)]

private static void Manipulate(int[] array)

{

for (int i=0; i < array.Length; i++)

{

array[i] = array[i / 2];

}

}

There is no way i / 2 can exceed array.Length, but the bounds check is still there:

00007FFADFCE03B0 sub rsp,28h // Adjust stack pointer

00007FFADFCE03B4 mov r8,qword ptr [rcx+8] // Copy array length to r8

00007FFADFCE03B8 test r8d,r8d // Test if length is 0

00007FFADFCE03BB jle 00007FFADFCE03E8 // If 0, skip loop

00007FFADFCE03BD xor r9d,r9d // Set i to 0

00007FFADFCE03C0 xor r10d,r10d // Set destination index to 0

00007FFADFCE03C3 mov eax,r9d // Set eax (source array index) to 0

00007FFADFCE03C6 cdq // sign extends eax into edx (needed for division)

00007FFADFCE03C7 sub eax,edx // eax = eax - edx

00007FFADFCE03C9 sar eax,1 // Shift right (divide by two)

00007FFADFCE03CB movsxd rax,eax // copy 32-bit eax into 64-bit rax and sign extend

00007FFADFCE03CE cmp rax,r8 // Compare source index against array length

00007FFADFCE03D1 jae 00007FFADFCE03F0 // If greater than or equal, jump and throw exception

00007FFADFCE03D3 mov eax,dword ptr [rcx+rax*4+10h] // Copy value from array[i/2] to eax

00007FFADFCE03D7 mov dword ptr [rcx+r10+10h],eax // Copy value from eax to array[i]

00007FFADFCE03DC inc r9d // Increment i

00007FFADFCE03DF add r10,4 // Increment destination address by 4 bytes

00007FFADFCE03E3 cmp r9d,r8d // Compare i against array length

00007FFADFCE03E6 jl 00007FFADFCE03C3 // If less, loop again

00007FFADFCE03E8 add rsp,28h // Adjust stack

00007FFADFCE03EC ret // return

00007FFADFCE03F0 call 00007FFB3F7E6590 // Throw exception

So even though we can prove that it’s safe, the compiler won’t.

There’s another way, that’s a bit more annoying. Remember the very first, baseline example, the one that did NOT have a bounds check. What if we had that exact code, but it operated on a static value rather than argument?

00007FFADFCE0500 mov rax,0A42A235780h

00007FFADFCE050A mov rax,qword ptr [rax]

00007FFADFCE050D movsxd r9,ecx

00007FFADFCE0510 mov r8,qword ptr [rax+8]

00007FFADFCE0514 cmp r9,r8 // bounds check!

00007FFADFCE0517 jae 00007FFADFCE0530

00007FFADFCE0519 mov eax,dword ptr [rax+r9*4+10h]

00007FFADFCE051E add edx,eax

00007FFADFCE0520 inc ecx

00007FFADFCE0522 cmp ecx,r8d

00007FFADFCE0525 jl 00007FFADFCE0500

00007FFADFCE0527 mov eax,edx

00007FFADFCE0529 add rsp,28h

00007FFADFCE052D ret

00007FFADFCE0530 call 00007FFB3F7E6590

The bounds check is there.

Ok, so what if we’re clever and we pass the static array to our baseline method, which doesn’t know it’s static? There is no bounds check in this case–it’s exactly the same as the baseline case.

Another case where the JIT fails to optimize where it could is iterating an array backwards:

[MethodImpl(MethodImplOptions.NoInlining)]

private static int GetBackwardsSum(int[] array)

{

int sum = 0;

for (int i = array.Length - 1; i >= 0; i--)

{

sum += array[i];

}

return sum;

}

Sad, but true–as much as this looks like the baseline example, it does not have the optimization.

Finally, consider the case of repeated access:

for (int i = 2;i < array.Length - 1; i++)

{

int x = array[i-2];

int y = array[i-2];

}

In this case, the compiler will emit a single bounds check that suffices for both accesses.

Using fixed to Eliminate Bounds Checks

Another way to eliminate bounds checks is to use the fixed keyword. I don’t really recommend it because it has safety and security considerations that you should definitely consider. However, if you really have a need for optimal array access with some nontrivial access pattern that would otherwise trigger a bounds check, this may work for you. In order to use fixed, you must enable unsafe code for your project.

Here are two options for using fixed to iterate through an array:

[MethodImpl(MethodImplOptions.NoInlining)]

unsafe private static int GetBackwardsSumFixedSubscript(int[] array)

{

int sum = 0;

fixed (int* p = array)

{

for (int i = array.Length - 1; i >= 0; i--)

{

sum += p[i];

}

}

return sum;

}

[MethodImpl(MethodImplOptions.NoInlining)]

unsafe private static int GetBackwardsSumFixedPointer(int[] array)

{

int sum = 0;

fixed (int* p = &array[array.Length - 1])

{

int* v = p;

for (int i = array.Length - 1; i >= 0; i--)

{

sum += *v;

v--;

}

}

return sum;

}

Either one will work, but keep in mind that unsafe really means unsafe! You can trample your memory in this method. I recommend keeping all unsafe code segregated to its own module, class, or otherwise limited scope and that it receives extra scrutiny during code reviews. You may also have to deal with organizational security issues with deploying unsafe code. If you can eliminate the bounds checking with pure managed code, strongly prefer that approach instead.

Foreach vs. For

Now, let’s shift our focus up one level of abstraction. In .NET, you can iterate over most collections with the foreach keyword. foreach is in most cases syntactic sugar for calling methods like GetEnumerator(), MoveNext(), and so forth. In this respect, it would be fair to assume that foreach has worse performance overall than a simple for or while loop.

However, that’s not quite the case. In some instances, the compiler will recognize a simple for loop on an array for what it is, regardless of the syntax used.

Foreach on Arrays

If we take our baseline loop from above and convert it to foreach, like this:

private static int GetSumForeach(int[] array)

{

int sum = 0;

foreach(var val in array)

{

sum += val;

}

return sum;

}

What is the generated IL in this case?

.method private hidebysig static

int32 GetSumForeach (

int32[] 'array'

) cil managed

{

// Method begins at RVA 0x2098

// Code size 28 (0x1c)

.maxstack 2

.locals init (

[0] int32 sum,

[1] int32 val,

[2] int32[] CS$6$0000,

[3] int32 CS$7$0001

)

IL_0000: ldc.i4.0

IL_0001: stloc.0

IL_0002: ldarg.0

IL_0003: stloc.2

IL_0004: ldc.i4.0

IL_0005: stloc.3

IL_0006: br.s IL_0014

// loop start (head: IL_0014)

IL_0008: ldloc.2

IL_0009: ldloc.3

IL_000a: ldelem.i4

IL_000b: stloc.1

IL_000c: ldloc.0

IL_000d: ldloc.1

IL_000e: add

IL_000f: stloc.0

IL_0010: ldloc.3

IL_0011: ldc.i4.1

IL_0012: add

IL_0013: stloc.3

IL_0014: ldloc.3

IL_0015: ldloc.2

IL_0016: ldlen

IL_0017: conv.i4

IL_0018: blt.s IL_0008

// end loop

IL_001a: ldloc.0

IL_001b: ret

} // end of method Program::GetSumForeach

Compare that to the IL from the baseline loop. The only difference is the presence of some additional, generated local variables to store the array and index variable. Otherwise, it looks exactly like a loop. And, in fact, the assembly code also looks exactly the same (including elimination of the bounds check):

00007FFADFCB01F0 mov rdx,rcx

00007FFADFCB01F3 xor ecx,ecx

00007FFADFCB01F5 mov r10,qword ptr [rdx+8]

00007FFADFCB01F9 test r10d,r10d

00007FFADFCB01FC jle 00007FFADFCB0223

00007FFADFCB01FE mov r8d,ecx

00007FFADFCB0201 xor r9d,r9d

00007FFADFCB0204 nop word ptr [rax+rax]

00007FFADFCB0210 mov eax,dword ptr [rdx+r9+10h]

00007FFADFCB0215 add ecx,eax

00007FFADFCB0217 inc r8d

00007FFADFCB021A add r9,4

00007FFADFCB021E cmp r8d,r10d

00007FFADFCB0221 jl 00007FFADFCB0210

00007FFADFCB0223 mov eax,ecx

00007FFADFCB0225 ret

For loop on List<T>

Before seeing foreach operate on List<T>, we should see what for looks like:

private static int GetSumFor(List<int> array)

{

int sum = 0;

for (int i = 0; i < array.Count; i++)

{

sum += array[i];

}

return sum;

}

And the corresponding IL:

.method private hidebysig static

int32 GetSumFor (

class [mscorlib]System.Collections.Generic.List`1 'array'

) cil managed

{

// Method begins at RVA 0x20a0

// Code size 31 (0x1f)

.maxstack 3

.locals init (

[0] int32 sum,

[1] int32 i

)

IL_0000: ldc.i4.0

IL_0001: stloc.0

IL_0002: ldc.i4.0

IL_0003: stloc.1

IL_0004: br.s IL_0014

// loop start (head: IL_0014)

IL_0006: ldloc.0

IL_0007: ldarg.0

IL_0008: ldloc.1

IL_0009: callvirt instance !0 class [mscorlib]System.Collections.Generic.List`1::get_Item(int32)

IL_000e: add

IL_000f: stloc.0

IL_0010: ldloc.1

IL_0011: ldc.i4.1

IL_0012: add

IL_0013: stloc.1

IL_0014: ldloc.1

IL_0015: ldarg.0

IL_0016: callvirt instance int32 class [mscorlib]System.Collections.Generic.List`1::get_Count()

IL_001b: blt.s IL_0006

// end loop

IL_001d: ldloc.0

IL_001e: ret

} // end of method Program::GetSumFor

It looks pretty much like the for loop on an array, but with some method calls instead of direct memory accesses to retrieve the values and the length (Count).

However, the assembly code generated from this is more interesting and shows some subtle and important differences:

00007FFADFCD0BE0 push rbx // Stack frame setup

00007FFADFCD0BE1 push rsi

00007FFADFCD0BE2 push rdi

00007FFADFCD0BE3 sub rsp,20h

00007FFADFCD0BE7 mov rdx,rcx // Copy array address to rdx

00007FFADFCD0BEA xor ecx,ecx // Set i to 0. i is used only for the loop condition.

00007FFADFCD0BEC xor r11d,r11d // Set j to 0. j is used as the iterator on the internal array inside List

00007FFADFCD0BEF mov r8d,ecx // Set sum to 0

00007FFADFCD0BF2 mov ebx,dword ptr [rdx+18h] // Copy length to ebx

// LOOP START

00007FFADFCD0BF5 cmp ecx,ebx // Compare i to length

00007FFADFCD0BF7 jl 00007FFADFCD0C00 // if less, jump below

00007FFADFCD0BF9 mov eax,r8d // else copy sum to return value

00007FFADFCD0BFC jmp 00007FFADFCD0C26 // jump to end of function

00007FFADFCD0BFE xchg ax,ax // No-op

00007FFADFCD0C00 mov eax,dword ptr [rdx+18h] // Copy list's length to eax

00007FFADFCD0C03 cmp ecx,eax // Compare i to length

00007FFADFCD0C05 jae 00007FFADFCD0C35 // if greater than or equal, jump and throw exception

00007FFADFCD0C07 mov r9,qword ptr [rdx+8] // Copy List's array address to r9

00007FFADFCD0C0B movsxd r10,r11d // Copy j to r10 and sign extend.

00007FFADFCD0C0E mov rax,qword ptr [r9+8] // Copy array's length to rax

00007FFADFCD0C12 cmp r10,rax // Compare j to array' length

00007FFADFCD0C15 jae 00007FFADFCD0C30 // If greater than or equal, jump and throw exception

00007FFADFCD0C17 mov eax,dword ptr [r9+r10*4+10h] // copy array[j] (list[i]) to eax

00007FFADFCD0C1C add r8d,eax // sum += array[j]

00007FFADFCD0C1F inc ecx // i++

00007FFADFCD0C21 inc r11d // j++

00007FFADFCD0C24 jmp 00007FFADFCD0BF5 // Jump to beginning of loop

00007FFADFCD0C26 add rsp,20h // Clean up stack

00007FFADFCD0C2A pop rdi

00007FFADFCD0C2B pop rsi

00007FFADFCD0C2C pop rbx

00007FFADFCD0C2D ret // return

00007FFADFCD0C30 call 00007FFB3F7E6590 // Throw exception

00007FFADFCD0C35 mov ecx,0Dh

00007FFADFCD0C3A call 00007FFB3CD990F8 // Throw exception

Here’s what’s going on:

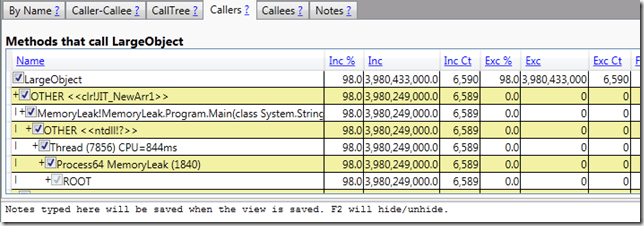

- The method calls on List<int> have been inlined away—we get direct memory access to the underlying array that List<int> maintains.

- There is an iteration on i that compares it to the length of the List<int>.

- There is a separate iteration on j (which I call j in the annotations), which compares it against the length of the internal array that List<int> wraps.

- Failing either iteration will cause an exception to be thrown.

The duplicate iterations don’t really cause the loop to be twice as slow as a pure array would be—there is still only one memory access and every thing else is operating on registers, which is extremely efficient. But if you have the choice between arrays and List<T>, well, it’s hard to beat an array for pure efficiency.

Foreach on List<T>

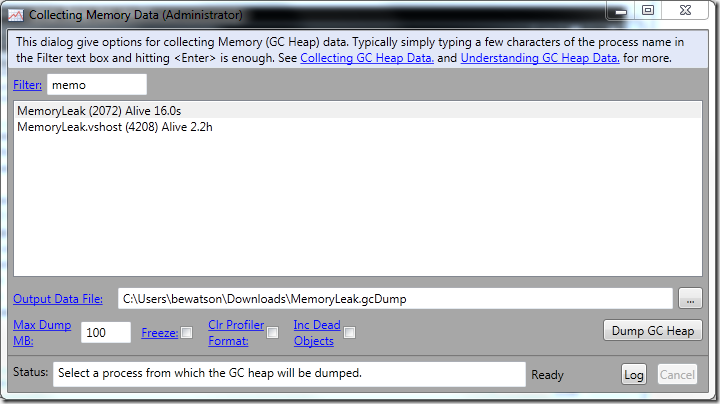

Finally, let’s turn our attention to foreach on List<T>. Will the compiler (or the JIT) turn this into a straightforward for loop?

No. Here’s the IL:

.method private hidebysig static

int32 GetSumForeach (

class [mscorlib]System.Collections.Generic.List`1 list

) cil managed

{

// Method begins at RVA 0x20f4

// Code size 50 (0x32)

.maxstack 2

.locals init (

[0] int32 sum,

[1] int32 val,

[2] valuetype [mscorlib]System.Collections.Generic.List`1/Enumerator CS$5$0000

)

IL_0000: ldc.i4.0

IL_0001: stloc.0

IL_0002: ldarg.0

IL_0003: callvirt instance valuetype [mscorlib]System.Collections.Generic.List`1/Enumerator< !0> class [mscorlib]System.Collections.Generic.List`1::GetEnumerator()

IL_0008: stloc.2

.try

{

IL_0009: br.s IL_0017

// loop start (head: IL_0017)

IL_000b: ldloca.s CS$5$0000

IL_000d: call instance !0 valuetype [mscorlib]System.Collections.Generic.List`1/Enumerator::get_Current()

IL_0012: stloc.1

IL_0013: ldloc.0

IL_0014: ldloc.1

IL_0015: add

IL_0016: stloc.0

IL_0017: ldloca.s CS$5$0000

IL_0019: call instance bool valuetype [mscorlib]System.Collections.Generic.List`1/Enumerator::MoveNext()

IL_001e: brtrue.s IL_000b

// end loop

IL_0020: leave.s IL_0030

} // end .try

finally

{

IL_0022: ldloca.s CS$5$0000

IL_0024: constrained. valuetype [mscorlib]System.Collections.Generic.List`1/Enumerator

IL_002a: callvirt instance void [mscorlib]System.IDisposable::Dispose()

IL_002f: endfinally

} // end handler

IL_0030: ldloc.0

IL_0031: ret

} // end of method Program::GetSumForeach

I won’t show the assembly code for this, but suffice it to say, it’s also quite different.

Foreach on (IEnumerable<T>)array

What would you expect to happen with code like this?

private static int GetSumForeach(int[] array)

{

var collection = array as IEnumerable<int>;

int sum = 0;

foreach (var val in collection)

{

sum += val;

}

return sum;

}

In this case, the array optimization will be lost and you’ll get the same thing you did with foreach over List<T>.

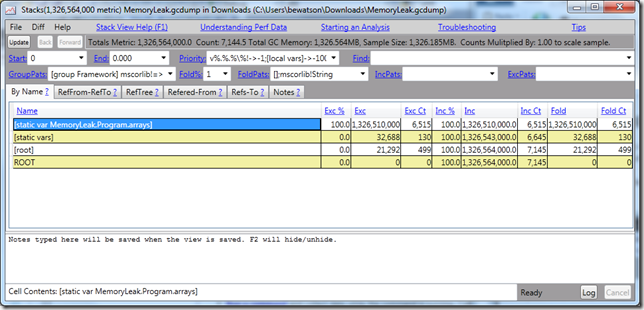

Loop Unrolling

Loop unrolling is the practice of reducing the overhead of a loop by causing fewer iterations, but more work per iteration. For loop-heavy code, it can make a big difference.

You can do this unrolling yourself, such as with the following example:

private static int GetSum(int[] array)

{

int sum = 0;

for (int i = 0; i < array.Length-4; i+=4)

{

sum += array[i];

sum += array[i+1];

sum += array[i+2];

sum += array[i+3];

}

return sum;

}

In some cases, the compiler can do this kind of optimization for you, but for loop unrolling to take place, there is one big constraint that must be obeyed: The loop constraints must be statically determinable so that the compiler can know how to modify the loop.

This is why we don’t see any loop unrolling in the assembly code for any of our previous examples. The length of the loop was always variable. What if, instead, we have something like this:

const int ArrayLength = 100;

private static int GetSum(int[] array)

{

int sum = 0;

for (int i = 0; i < ArrayLength; i++)

{

sum += array[i];

}

return sum;

}

The IL for this method looks about the same as before, but the assembly shows the optimization:

00007FFADFCE01C0 sub rsp,28h // Setup stack frame

00007FFADFCE01C4 mov rdx,rcx // Copy array address to rdx

00007FFADFCE01C7 xor ecx,ecx // Set sum to 0

00007FFADFCE01C9 xor r8d,r8d // Set address offset to 0

00007FFADFCE01CC mov rax,qword ptr [rdx+8]// Copy array length to rax

00007FFADFCE01D0 mov r9d,60h // Copy value 96 to r9

00007FFADFCE01D6 cmp r9,rax // Compare 96 to length

00007FFADFCE01D9 jae 00007FFADFCE0230 // If greater than or equal, jump and throw exception

00007FFADFCE01DB mov r9d,61h // Copy value 97 to r9

00007FFADFCE01E1 cmp r9,rax // Compare to length

00007FFADFCE01E4 jae 00007FFADFCE0230 // If greater than or equal, jump and throw exception

00007FFADFCE01E6 mov r9d,62h // Copy value 98 to r9

00007FFADFCE01EC cmp r9,rax // Compare to length

00007FFADFCE01EF jae 00007FFADFCE0230 // If greater than or equal, jump and throw exception

00007FFADFCE01F1 mov r9d,63h // Copy value 99 to r9

00007FFADFCE01F7 cmp r9,rax // Compare to length

00007FFADFCE01FA jae 00007FFADFCE0230 // If greater than or equal, jump and throw exception

00007FFADFCE01FC nop dword ptr [rax] // Nop

// LOOP START

00007FFADFCE0200 mov eax,dword ptr [rdx+r8+10h] // Copy array[i] to eax

00007FFADFCE0205 add ecx,eax //sum += array[i]

00007FFADFCE0207 mov eax,dword ptr [rdx+r8+14h] // Copy array[i+1] to eax

00007FFADFCE020C add ecx,eax // sum += array[i+1]

00007FFADFCE020E mov eax,dword ptr [rdx+r8+18h] // Copy array[i+2] to eax

00007FFADFCE0213 add ecx,eax // sum += array[i+2]

00007FFADFCE0215 mov eax,dword ptr [rdx+r8+1Ch] // Copy array[i+3] to eax

00007FFADFCE021A add ecx,eax // sum += array[i+3]

00007FFADFCE021C add r8,10h // Increment address offset by 16 bytes (4 integers)

00007FFADFCE0220 cmp r8,190h // Compare address offset to 400

00007FFADFCE0227 jl 00007FFADFCE0200 // If less, loop around

00007FFADFCE0229 mov eax,ecx // Copy sum to return value

00007FFADFCE022B add rsp,28h // Fix up stack

00007FFADFCE022F ret // return

00007FFADFCE0230 call 00007FFB3F7E6590 // throw exception

The basic operation of this is:

- Check that we can safely do increments of N values and overflow the array (N being 4 in this specific case).

- Do N operations per loop body.

As soon as you deviate from the static determinism, however, you will not be able to rely on this optimization. However, if you have special knowledge of your arrays (such as that their length is always a multiple of 4), then you can do the loop unrolling yourself in the source code.

As far as the current JIT compiler goes, you will lose this optimization if you change the array size in this example to 101. Some compilers will split this–an unrolled loop, with the leftovers tacked onto the end. The JIT compiler doesn’t do this at this time (remember, it’s under a severe time constraint).

The ultimate loop unrolling of course is just eliminating the loop altogether and listing out every operation individually. For small operations, this could be advisable–it’s just a tradeoff between code maintenance and performance that you have to make.

Summary

Understanding how small differences in code translate into more or less efficient machine code is important if you need to get the last small measure of performance on your more performance-critical components, especially if you are CPU bound.

Of all of the collections, arrays are the most efficient to access, but List<T> is very close. When iterating over arrays, you want to try to eliminate the bounds check if possible. When deciding between for and foreach, keep in mind that foreach can involve method calls for anything except pure arrays.

Understand the conditions that allow for loop unrolling. If you can formulate your code to allow it, and it makes a difference, go for it, or manually unroll loops if you get a performance gain out of it.

Most of all, figure out how to answer these types of questions for yourself so that the next time deep performance questions arise, you know how to quickly determine the answer.