It is with great pleasure that I announce the latest open source release from Microsoft. This time it’s coming from Bing.

Before explaining what it is and how it works, I have to mention that nearly all of the work of getting this set up on GitHub and prepared for public release was done by Chip Locke, one of our superstars.

What It Is

Microsoft.IO.RecyclableMemoryStream is a MemoryStream replacement that offers superior behavior for performance-critical systems. In particular it is optimized to do the following:

- Eliminate Large Object Heap allocations by using pooled buffers

- Incur far fewer gen 2 GCs, and spend far less time paused due to GC

- Avoid memory leaks by having a bounded pool size

- Avoid memory fragmentation

- Provide excellent debuggability

- Provide metrics for performance tracking

In my book Writing High-Performance .NET Code, I had this anecdote:

In one application that suffered from too many LOH allocations, we discovered that if we pooled a single type of object, we could eliminate 99% of all problems with the LOH. This was MemoryStream, which we used for serialization and transmitting bits over the network. The actual implementation is more complex than just keeping a queue of MemoryStream objects because of the need to avoid fragmentation, but conceptually, that is exactly what it is. Every time a MemoryStream object was disposed, it was put back in the pool for reuse.

-Writing High-Performance .NET Code, p. 65

The exact code that I’m talking about is what is being released.

How It Works

Here are some more details about the features:

- A drop-in replacement for System.IO.MemoryStream. Its semantics match MemoryStream as closely as possible.

- Rather than pooling the streams themselves, the underlying buffers are pooled. This allows you to use the simple Dispose pattern to release the buffers back to the pool, as well as detect invalid usage patterns (such as reusing a stream after it’s been disposed).

- Completely thread-safe. That is, the manager (RecyclableMemoryStreamManager) is thread safe. Streams themselves are inherently NOT thread safe.

- Each stream can be tagged with an identifying string that is used in logging. This can help you find bugs and memory leaks in your code relating to incorrect pool use.

- Debug features like recording the call stack of the stream allocation to track down pool leaks

- Maximum free pool size to handle spikes in usage without using too much memory.

- Flexible and adjustable limits to the pooling algorithm.

- Metrics tracking and events so that you can see the impact on the system.

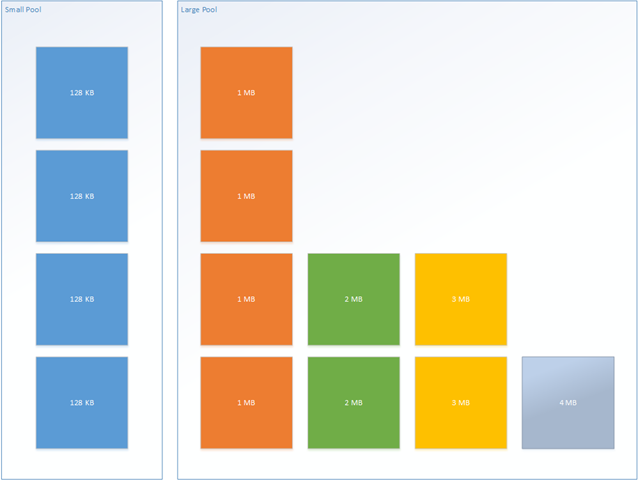

- Multiple internal pools: a default “small” buffer (default of 128 KB) and additional, “large” pools (default: in 1 MB chunks). The pools look kind of like this:

In normal operation, only the small pool is used. The stream abstracts away the use of multiple buffers for you. This makes the memory use extremely efficient (much better than MemoryStream’s default doubling of capacity).

The large pool is only used when you need a contiguous byte[] buffer, via a call to GetBuffer or (let’s hope not) ToArray. When this happens, the buffers belonging to the small pool are released and replaced with a single buffer at least as large as what was requested. The size of the objects in the large pool is completely configurable, but if a buffer greater than the maximum size is requested, one will still be created (it just won’t be pooled upon Dispose).
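To make the comparison with MemoryStream’s doubling concrete, here is a rough sketch of the arithmetic only. It does not use the library itself; the 4 KB write size, the 1.1 MB payload, and the helper names are assumptions chosen for illustration. It compares how many 128 KB blocks would be needed to hold a payload against the capacity a plain MemoryStream ends up with after the same writes.

```csharp
using System;
using System.IO;

static class CapacityComparison
{
    // Number of fixed-size blocks needed to hold `length` bytes (ceiling division).
    public static long BlocksNeeded(long length, int blockSize)
    {
        return (length + blockSize - 1) / blockSize;
    }

    // Capacity a plain MemoryStream reports after `length` bytes are written in 4 KB chunks.
    public static int MemoryStreamCapacityAfter(int length)
    {
        var chunk = new byte[4096];
        using (var stream = new MemoryStream())
        {
            int remaining = length;
            while (remaining > 0)
            {
                int count = Math.Min(chunk.Length, remaining);
                stream.Write(chunk, 0, count);
                remaining -= count;
            }
            return stream.Capacity;
        }
    }

    public static void Main()
    {
        const int blockSize = 128 * 1024; // small-pool default quoted above
        const int length = 1100 * 1024;   // hypothetical payload of about 1.1 MB

        long blocks = BlocksNeeded(length, blockSize);
        Console.WriteLine("Pooled blocks: {0} ({1} bytes reserved)", blocks, blocks * blockSize);
        Console.WriteLine("MemoryStream capacity: {0} bytes", MemoryStreamCapacityAfter(length));
    }
}
```

With fixed-size blocks, the slack is never more than one block, whereas a doubling buffer can reserve up to roughly twice what was written.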

Examples

You can jump right in with no fuss by just doing a simple replacement of MemoryStream with something like this:

    var sourceBuffer = new byte[] { 0, 1, 2, 3, 4, 5, 6, 7 };
    var manager = new RecyclableMemoryStreamManager();
    using (var stream = manager.GetStream())
    {
        stream.Write(sourceBuffer, 0, sourceBuffer.Length);
    }

Note that RecyclableMemoryStreamManager should be declared once and it will live for the entire process–this is the pool. It is perfectly fine to use multiple pools if you desire.

To facilitate easier debugging, you can optionally provide a string tag, which serves as a human-readable identifier for the stream. In practice, I’ve usually used something like “ClassName.MethodName” for this, but it can be whatever you want. Each stream also has a GUID to provide absolute identity if needed, but the tag is usually sufficient.

    using (var stream = manager.GetStream("Program.Main"))
    {
        stream.Write(sourceBuffer, 0, sourceBuffer.Length);
    }

You can also provide an existing buffer. It’s important to note that this buffer will be copied into the pooled buffer:

    var stream = manager.GetStream("Program.Main", sourceBuffer,
                                   0, sourceBuffer.Length);

You can also change the parameters of the pool itself:

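    int blockSize = 1024;                         // size of each small-pool block, in bytes
    int largeBufferMultiple = 1024 * 1024;        // large buffers come in multiples of this size
    int maxBufferSize = 16 * largeBufferMultiple; // anything larger is allocated but not pooled
    var manager = new RecyclableMemoryStreamManager(blockSize,
                                                    largeBufferMultiple,
                                                    maxBufferSize);

    manager.GenerateCallStacks = true;            // record allocation call stacks for debugging
    manager.AggressiveBufferReturn = true;
    // Cap how many free bytes each pool may hold on to
    manager.MaximumFreeLargePoolBytes = maxBufferSize * 4;
    manager.MaximumFreeSmallPoolBytes = 100 * blockSize;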

Is this library for everybody? No, definitely not. This library was designed with some specific performance characteristics in mind. Most applications probably don’t need those. However, if they do, then this library can absolutely help reduce the impact of GC on your software.

Let us know what you think! If you find bugs or want to improve it in some way, then dive right into the code on GitHub.

Links

- Microsoft.IO.RecyclableMemoryStream on GitHub

- NuGet package

- Writing High-Performance .NET Code [the book explains in detail the situations in which you may need something like this]

How is RecyclableMemoryStreamManager supposed to be used? Do we recreate it each time we want access to the pool? Or do we keep the same instance for the life of the application?

If the latter, is it threadsafe or do we need to put a lock around it?

The manager should last the life of the process. And it is thread safe. I will edit the post to make this clearer.

You said I can name these buffers to ease debugging. How do I examine these names, and what am I looking for in them? Are there hooks to observe buffer allocations, perfmon counters, etc?

Are there any reasons I wouldn’t want to use this willy-nilly going forward?

The tag parameter supplied in the GetStream method will allow you to name the stream. In addition, each stream has a GUID associated with it. Both of these values are passed into the events that are fired for various operations, such as buffer allocation. You could then hook up perfmon or ETW counters as your particular application requires.

Could this be something for ASP.NET Generated Image https://aspnet.codeplex.com/releases/view/16449 ? I very often see application problems due to a fragmented LOH when processing image streams.

Can you give any examples of the types of applications that would benefit from this?

Any application that needs to do serialization/deserialization using MemoryStream or pure byte buffers. Anything that has a high rate or latency of Gen2 garbage collections due to large object heap allocations.

var stream=httpWebResponse.GetResponseStream();

Is it possible to make the raw HTTP stream recyclable?

What I mean is: when the code makes an HTTP request, it gets an HTTP response stream whose underlying storage is an unmanaged TCP byte array. RecyclableMemoryStream can only reuse byte arrays in managed code. So I think that reusing the byte array allocated by the HTTP response is impossible to implement. Am I right?

I don’t think it’s possible to pool those streams. If they’re unmanaged, then pooling won’t matter–unmanaged memory won’t contribute to GC.

I’d love to put RecyclableMemoryStream to use, but would love to have some sort of measurement in place before implementing so I can get an indication of whether there is anything to be gained by implementing it or not.

Do you have a suggestion on a good measurement and a method for getting it in an automated and consistent way? I’d really prefer a unit test that could print (or even assert) something at the end. Is it possible to perform any sort of useful measurement in a programmatic way?

It might be difficult to measure this in a programmatic way. Really, what you need to do is profile. Visual Studio, PerfView, or other memory profilers will tell you what’s going on: how many GCs you have, how long they are, and where the memory allocations are. It’s technically possible to do this from within your program if you set up an ETW event listener and process those events yourself, but that’s a little heavy. Take a look at my book for more information on this. http://www.writinghighperf.net
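For readers who still want a rough number from inside a test, one crude signal is the process-wide gen 2 collection counter exposed by GC.CollectionCount. The sketch below is only an assumption about how such a check might look, not a substitute for profiling: the helper name and the workload (1,000 MemoryStream writes of 256 KB) are made up for illustration, and the counter also picks up collections triggered by other threads.

```csharp
using System;
using System.IO;

static class Gen2Counter
{
    // Runs `workload` and returns how many gen 2 collections occurred while it ran.
    public static int CountGen2Collections(Action workload)
    {
        int before = GC.CollectionCount(2);
        workload();
        return GC.CollectionCount(2) - before;
    }

    public static void Main()
    {
        int collections = CountGen2Collections(() =>
        {
            var payload = new byte[256 * 1024];
            for (int i = 0; i < 1000; i++)
            {
                // Each iteration allocates a fresh large buffer inside MemoryStream.
                using (var stream = new MemoryStream())
                {
                    stream.Write(payload, 0, payload.Length);
                }
            }
        });
        Console.WriteLine("Gen 2 collections during workload: {0}", collections);
    }
}
```

Running the same workload once with MemoryStream and once with a pooled stream gives a before/after comparison, though the numbers will vary between runs and machines.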

My understanding of the Large Object Heap is that any object over 85k will be allocated on the LOH. The default block size for the small pool is 128k, yet it’s stated that the RecyclableMemoryStream will “Eliminate Large Object Heap allocations by using pooled buffers”.

Am I missing something? Or is the phrasing of that statement meant to mean that it will prevent NEW Large Object Heap allocations by reusing the existing pool already created? Assuming that’s true, do you think there would be a benefit to using a smaller block size in the small pool so that the blocks are not placed on the LOH at all?

In a related question, while the allocation strategy clearly improves memory performance by reusing existing buffers, is it possible this could still cause LOH fragmentation because the blocks are not contiguous?

My questions are more for curiosity and clarity than anything else. The code itself is excellent. Thanks.

@Jacob, Yes the wording is probably a little off. Once the blocks are allocated, no further LOH allocations will happen. Once memory is going to stick around, it doesn’t matter whether it lives in the LOH or SOH–the lifetime will ensure it’s in Gen 2 either way. However, choosing the block size should be data-driven: what’s the 99.9th percentile length of the buffers you need, for example.

LOH fragmentation could still happen, but as long as overall LOH allocations are small in number, the uniform and large block size minimizes the risk. Also, these days, the CLR lets you compact the LOH anyway, so this is of slightly lesser importance (as long as you can afford to do the occasional compaction).
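Both points in this reply can be checked with standard runtime APIs. The sketch below assumes nothing about the library: it uses GC.GetGeneration to show that a 128 KB array is reported in generation 2 immediately (which is how LOH allocations appear), and GCSettings.LargeObjectHeapCompactionMode to request a one-time LOH compaction. The class and method names are hypothetical.

```csharp
using System;
using System.Runtime;

static class LohDemo
{
    // True when the runtime reports the array in generation 2 right after allocation,
    // which is how large object heap allocations show up.
    public static bool IsReportedAsGen2(byte[] buffer)
    {
        return GC.GetGeneration(buffer) == 2;
    }

    // Requests LOH compaction for the next blocking full collection, then triggers one.
    public static void CompactLargeObjectHeapOnce()
    {
        GCSettings.LargeObjectHeapCompactionMode = GCLargeObjectHeapCompactionMode.CompactOnce;
        GC.Collect();
    }

    public static void Main()
    {
        Console.WriteLine("1 KB array in gen 2: {0}", IsReportedAsGen2(new byte[1024]));
        Console.WriteLine("128 KB array in gen 2: {0}", IsReportedAsGen2(new byte[128 * 1024]));
        CompactLargeObjectHeapOnce();
    }
}
```

A forced compaction pauses the process while large objects are moved, so it only makes sense at moments when that pause is affordable.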

Hi,

I don’t know if this is the correct place, but I am facing some problems with RecyclableMemoryStream. I am replacing the normal MemoryStream with RecyclableMemoryStream (from NuGet) in a multi-threaded service application. I create one instance of the RecyclableMemoryStreamManager:

    private static RecyclableMemoryStreamManager Manager = new RecyclableMemoryStreamManager();

and use this instance throughout the application. To get a stream object, I call GetStream on the Manager:

    //Example:
    byte[] buffer;
    Image img = Bitmap.FromFile(PathToImage);
    using (var stream = Manager.GetStream())
    {
        img.Save(stream, ImageFormat.Png);
        buffer = stream.GetBuffer();
    }

From time to time, when I call stream.GetBuffer() or stream.Read(, , ), I get invalid/wrong data.

If I use a new instance of the RecyclableMemoryStreamManager on each GetStream call, everything seems OK.

    //Example:
    byte[] buffer;
    Image img = Bitmap.FromFile(PathToImage);
    var Manager = new RecyclableMemoryStreamManager();
    using (var stream = Manager.GetStream())
    {
        img.Save(stream, ImageFormat.Png);
        buffer = stream.GetBuffer();
    }

As I understand it, only one instance of the RecyclableMemoryStreamManager is needed? Am I doing something wrong?

Thanks

It’s probably better to have this discussion at GitHub, but I can try to answer here. Your sample code doesn’t include how you’re using the buffer, so how do you know it has invalid/wrong data?

The manager is able to be used on multiple threads.

I can think of two things that you are possibly not taking into account:

1. While the manager is thread safe, individual streams are not–are you using the same stream on multiple threads?

2. When you call GetBuffer(), you get the entire buffer, even though you may have only read a few bytes into it. The image size could be 40K, yet the default buffer size is 128K. In the code above, you are not tracking the length of the actual data in the buffer, which is required when you use pooled buffers.
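The second point can be sketched as follows. This is a minimal illustration, assuming the Microsoft.IO.RecyclableMemoryStream NuGet package is referenced; the class name, method name, and tag are hypothetical. It reads the valid length from the stream alongside GetBuffer() and copies the data out before the stream is disposed, since Dispose releases the buffer back to the pool.

```csharp
using System;
using Microsoft.IO;

static class GetBufferExample
{
    private static readonly RecyclableMemoryStreamManager Manager =
        new RecyclableMemoryStreamManager();

    // Writes `payload` to a pooled stream and returns only the bytes that were written.
    public static byte[] CopyPayload(byte[] payload)
    {
        using (var stream = Manager.GetStream("GetBufferExample.CopyPayload"))
        {
            stream.Write(payload, 0, payload.Length);

            byte[] pooled = stream.GetBuffer();   // may be much larger than the data
            int validLength = (int)stream.Length; // only this many bytes are meaningful

            // Copy while the stream is still alive; after Dispose the pooled
            // buffer can be handed to another stream.
            var copy = new byte[validLength];
            Buffer.BlockCopy(pooled, 0, copy, 0, validLength);
            return copy;
        }
    }
}
```

If the consumer can accept an (array, offset, count) triple, it can use the pooled buffer directly inside the using block and skip the copy.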

Hi Ben,

my mistake. I was using GetBuffer() incorrectly. With the length tracked, everything works as expected.

Hi, Thanks for putting this together, this is what we needed. When you say “Note that RecyclableMemoryStreamManager should be declared once and it will live for the entire process”

Does this mean in a Web API solution, this should be a static element? Otherwise, a new manager will be created for each request.

Thanks

Yep, exactly. Recreating the manager on every request would defeat the purpose.
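One way to get a single process-wide manager is a static field, roughly like the sketch below. The class names, method name, and tag are hypothetical, and it assumes the Microsoft.IO.RecyclableMemoryStream package is referenced; only the parameterless constructor and GetStream(tag) shown in the post are used.

```csharp
using Microsoft.IO;

public static class StreamPool
{
    // Created once when the type is first used and shared by every request.
    public static readonly RecyclableMemoryStreamManager Manager =
        new RecyclableMemoryStreamManager();
}

public static class ResponseWriter
{
    // Each call gets its own stream, but all streams draw from the same pool.
    public static long WritePayload(byte[] payload)
    {
        using (var stream = StreamPool.Manager.GetStream("ResponseWriter.WritePayload"))
        {
            stream.Write(payload, 0, payload.Length);
            return stream.Length;
        }
    }
}
```

The manager is shared across threads, while each stream stays local to the call that created it.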

I’m curious why the keyword ‘override’ is used for the Write method instead of the keyword ‘new’. The Write method of the base class MemoryStream is not declared as virtual.

Write is inherited from Stream and is indeed virtual. (Note that there are multiple Write methods)

When profiling a long-running process, I see around 100k live instances of RecyclableMemoryStream, holding around 200K byte[]. This seems strange to me: I did not allocate that many streams, and I’m using the using 🙂 statement around each new stream.

So any idea why this would happen?

Thanks,

Omer

Thanks for a great article! I have recently blogged about ArrayPool, which is a high performance pool of managed arrays. If anybody is interested in learning more, here is the link: http://adamsitnik.com/Array-Pool/

Check this out

https://github.com/aumcode/nfx/tree/master/Source/NFX/ApplicationModel/Pile

Great article Ben and very timely!

Would love to see newer articles on server GC covering the latest versions of the FW.

Is this a good choice for asp.net applications?

Should we create a static instance of manager in global.asax and re-use it Application wide?

That sounds reasonable to me. It’s perfectly fine to use this in ASP.NET.

Hello,

I have recently used RecyclableMemoryStream in one of my projects using Visual Studio 2017 and Nuget Package manager.

It works fine on my local machine. But, TeamCity is not able to download this package automatically and compilation of this project fails with the below error:

error CS0234: The type or namespace name ‘IO’ does not exist in the namespace ‘Microsoft’ (are you missing an assembly reference?)

This is happening only for this package. Other packages that are installed via Nuget are successfully added in references as the option of auto download missing packages is on.

Could you please advise me on this?

Sorry, I don’t know what the problem could be. I would follow up with TeamCity support.

Very useful. Thanks

Hi Ben, great work, thanks for sharing. I’ve put RecyclableMemoryStream to use as the backing store for creating instances of System.IO.Compression.ZipArchive served back out through the HttpResponse in a web app.

Do you think it’s possible to implement something similar that re-uses the buffers behind FileStream, or is the implementation of that tied to win32 stuff?

cheers

I’ve never looked into FileStream, so I don’t really know–should be easy to find out, though!

Does this library support .NET Core?

Is it OK to define RecyclableMemoryStreamManager in Startup with AddSingleton?

yes
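For the AddSingleton question, a registration might look roughly like this. It is a sketch under assumptions: it uses Microsoft.Extensions.DependencyInjection directly in a console-style Main so it stays self-contained, and the class and method names are hypothetical. In an ASP.NET Core app the AddSingleton call would normally go in Startup.ConfigureServices.

```csharp
using System;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.IO;

static class SingletonRegistration
{
    public static IServiceProvider BuildProvider()
    {
        var services = new ServiceCollection();
        // Register one shared instance so every consumer draws from the same pool.
        services.AddSingleton(new RecyclableMemoryStreamManager());
        return services.BuildServiceProvider();
    }

    public static void Main()
    {
        var provider = BuildProvider();
        var first = provider.GetRequiredService<RecyclableMemoryStreamManager>();
        var second = provider.GetRequiredService<RecyclableMemoryStreamManager>();

        // Both resolutions should return the same manager instance.
        Console.WriteLine(object.ReferenceEquals(first, second));
    }
}
```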