Star Trek, along with other science fiction futures, has given us many things: not only a vision of humanity that is hopefully a little better than we prove to be, but also a taste of what technology can be like when it is integrated so fully into people’s lives that it is nearly taken for granted.

The computer on the Enterprise is an interesting entity to think about. A crew member can ask it just about any question and it can give the desired answer. It doesn’t matter if the question is slightly vague, or depends on prior knowledge of the conversation. What phenomenal power! How does it work?

I can think of only two possibilities:

- It can read their minds.

- It has been paying attention to their conversation, and thus understands the context.

Not discounting the possibility of the first scenario, I want to think about the second.

Context

How much of our understanding of our immediate environment is due to context? When analyzing a situation, we have at our disposal our:

- Experience

- Book knowledge

- Logical analysis/intuition (I group them together, since intuition could theoretically be a subconscious logical process colored by experience. I don’t know whether I believe that, but it’s not important here.)

Now take away experience. How would you fare when confronted with new situations (which, by definition, would be all situations)?

Most of us, I think, would understandably quail under the rigor of thought required to get through such an ordeal. If you believe otherwise, make the situation extreme: flying a plane, or leading a squad into war. No amount of knowledge or rational thought will help here. You need the benefit of hard-core training: experience, which is to say context.

Do this exercise: describe to someone what salt tastes like. It is surprisingly hard to do without falling back on the word “salty” itself.

On the other hand, saying “It’s too salty” immediately conveys exactly what you mean, based on shared context and mutual experience.

There is an enormous gap between where our computer systems are now and what is perhaps the holy grail of foreseeable technology: the computer on the Enterprise, an all-seeing, all-knowing, conversant entity. It’s like Wikipedia, but with a depth of knowledge unheard of on any website today, all cross-referenced and searchable.

Wikipedia is a decent (I won’t say great) source of much knowledge, but it’s hardly definitive, or all-encompassing. Also, it’s just facts. It’s not calculation or interpretation. It does not advise or synthesize.

In Star Trek, when a crew member asks questions, they can be fact-based, context-free questions that require only simple look-ups to answer. But often there is a series of questions, with dialogue in between, all related to a certain topic. No single query contains all the information required to retrieve a response. Rather, the computer has tracked the context and maintained an accurate representation of the conversation thus far. In essence, the computer is participating fully in the conversation.

An idea of what context means is demonstrated by this simple list of questions. Just imagine giving these to a computer or search engine today. The first one is ok, but following that, not so much.

- What are the latest Hubble Telescope pictures?

- When were these taken?

- How much longer will it stay up?

- How will the next space telescope be different?

- Compare the efforts of all G7 nations to build orbiting observation platforms.

Each question after the first presupposes the ones before it. The computer must keep track of this. That last one is a real doozy: it asks the computer to synthesize information from multiple sources into a coherent, original response. We can’t even dream of something this advanced right now, but I believe it’s coming.
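To make the idea of tracking context a little more concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the `Conversation` class, the `ask` method, and the tiny `REFERRING_WORDS` list are hypothetical names, and the topic is handed in explicitly rather than discovered. It only shows the bookkeeping involved, not how a real system would understand language.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Words that signal a question leans on something said earlier.
# This short list is an illustrative assumption, not a linguistic model.
REFERRING_WORDS = {"it", "these", "this", "they", "that", "those"}


@dataclass
class Conversation:
    """Toy context tracker: remembers the most recent topic of a dialogue."""

    topic: Optional[str] = None
    history: List[str] = field(default_factory=list)

    def ask(self, question: str, topic: Optional[str] = None) -> str:
        """Return the question with referring words replaced by the tracked topic."""
        if topic is not None:
            self.topic = topic  # the caller tells us what the question is about
        words = question.rstrip("?").split()
        resolved = [
            self.topic if self.topic and w.lower() in REFERRING_WORDS else w
            for w in words
        ]
        expanded = " ".join(resolved) + "?"
        self.history.append(expanded)
        return expanded


chat = Conversation()
chat.ask("What are the latest Hubble Telescope pictures?",
         topic="the latest Hubble Telescope pictures")
print(chat.ask("When were these taken?"))
# prints "When were the latest Hubble Telescope pictures taken?"
```

The second question only becomes answerable once the remembered topic is substituted in. The third question from the list already defeats this toy: "it" in "How much longer will it stay up?" refers to the telescope, not the pictures, and the sketch would substitute the wrong thing. Knowing which earlier noun a word points back to is exactly the hard part.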

On the other hand, let’s take a different direction, more personal:

- Which of my friends are having a birthday in the next month?

- What book should I read next?

- What do I need to get at the store?

- Where are my children?

Is this possible to do today? Yes, technologically speaking.

It’s not technology that will hold us back. It’s us.

Security, Privacy

Think of what it means to have a computer able to access full context to answer any query you throw at it. It has to know everything about you. To give you good food recommendations, it has to know where you’ve eaten and how you liked it. To be able to answer arbitrary questions in context, it needs to record your every conversation, parse it, cross-reference it, and store it for later access.

In our current culture, what this means is tying together all systems. There are intimations of this happening. Every time you hear of a company providing an API to access its data, that’s a little piece of this context being hooked up. It means that the computer now has access to your Facebook and LinkedIn data, so that when you search for “tortoise” it can see that you’re a developer, and that a high proportion of software developers actually want to download “TortoiseSVN”, not see pictures of reptiles. In fact, it probably means there is no such thing as Facebook (or any other social network) anymore. There is just one network filled with data.
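The “tortoise” case can be sketched in a few lines of Python. This is a toy under stated assumptions: the `rank_results` function, the result dictionaries, and the tag sets are all hypothetical, and a real search engine would use far richer signals than tag overlap.

```python
def rank_results(results, interests):
    """Put results sharing the most tags with the user's interests first.

    `results` is a list of dicts with "title" and "tags" (a set of strings);
    `interests` is a set of strings taken from a hypothetical user profile.
    Ties keep their original order because Python's sort is stable.
    """
    return sorted(results, key=lambda r: len(r["tags"] & interests), reverse=True)


results = [
    {"title": "Tortoise (reptile)", "tags": {"animals", "nature"}},
    {"title": "TortoiseSVN", "tags": {"software", "version-control"}},
]

developer = {"software", "programming"}
print(rank_results(results, developer)[0]["title"])
# prints "TortoiseSVN"
```

With an empty profile the order is left unchanged, so the reptile stays on top. The ranking code is trivial. What makes the difference is the profile data, and that is where the privacy cost comes in.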

It becomes even more intertwined. If I really want the computer to have full context of me, it should monitor what I watch on TV, what my tastes in music are, where I go, where I work, my habits, who I call, what I talk about, etc., etc., etc. It never ends.

Now, here’s the million-dollar question: who would agree to such invasive procedures, even if the benefit was enormous?

In many ways, we are agreeing to it all the time. We allow places like Amazon, Netflix, and iTunes to track all our purchases in order to give us decent recommendations (in the hope we’ll purchase more). We give up a bit of our privacy when we get grocery loyalty cards, or even credit cards. This is all tracked and correlated. In the case of recommendation systems, there is a tangible benefit for us, but the value of loyalty cards is less certain, other than lower prices (which are not an inherent benefit of those cards, just a marketing tactic). Indeed, studies have shown just how little we humans will accept in exchange for our privacy.

There are a few levels of security we need to worry about. At a low level, how much do you trust Google, or Microsoft, or Apple, or Amazon with your information? Right now, a lot of us trust them with a fair amount, but nowhere near our entire life’s story. We have it neatly segmented: part in Amazon, part in Facebook, part in Google, part in all the other companies we deal with. If mistakes are made, or consequences are not thought through, we get problems like all our friends seeing what we’re purchasing.

At a high level, we need to consider all of this context ending up in the wrong hands. Not just scammers and other low-lifes, but government, foreign and domestic. The potential for abuse is massive—so much so that most of us wouldn’t voluntarily agree to any of the unifying ideas in this essay in our lifetimes. We just don’t trust anybody that much.

In essence, a Star Trek-like computer would require massive amounts of “spyware” on every system in the world, all tied together in a massive database. This is possible (maybe even desired?) in a closed system like a ship, where everything is easily monitored and hierarchies of security are well-understood. In the world at large, it’s just scary.

Economy and Altruism

I believe another obstacle to this is money. The way our society works, with limited resources, we are required (?) to have some system of trade, an economy. These days, the trade is often over information, the very thing this mythical Star Trek computer depends on. Think credit reports, buying history, demographics.

What is the specific danger of businesses finding out personal information about you? Can they force you to buy something? Not likely. But they can manipulate the environment in such a way as to make it more likely. They present a lie designed to sell you something you don’t need. More maliciously, they can also sell your information to more consequential entities, like insurance companies or governments. If the government is too powerful, there is no way to prevent this. Think about what happens in China.

Is the only way to have such efficient and helpful systems to do away with our current capitalistic economy? Yes and no. Such far-reaching, life-changing technologies will undoubtedly continue to be developed and become more a part of our lives than they already are. Unfortunately, the potential for abuse is enormous and will grow as we become more and more dependent on them. We have no inherent trust in the system, nor should we. Just look at the ridiculous politicking taking place over voting machines. That’s just one system, and our society can’t get it right. We have a thousand such systems, many hanging onto usefulness and security by a thread. I bet such systems are not the exception but the rule. Why should we trust such things to run our lives? We shouldn’t. There are so many reasons for this: corruption, economy, politics, and motivation.

Perhaps motivation is the key. We are often motivated now by money, comfort, or some other selfish reason, reasonable or not. In the Star Trek vision of the future, we see a population depicted as motivated by a quest for knowledge and understanding. That’s why they can have all-knowing computers. They trust whoever created the computer and what it does. They know there is no political or other ulterior motive. Yes, there’s adequate security and protection against attack, but the whole starting mindset is different.

Don’t think that I’m in favor of destroying capitalism in favor of more socialistic or idealistic systems. Imposing a system of “fairness” or “equality” does nothing to further those goals and I’m not advocating any political or economic system—I’m merely stating what I think the reality must be in the future for us to make these advancements. People themselves must reform their motivations. Pushing any political system has no effect because the fundamentals of our world haven’t changed. Resources are still scarce, thus economy must still exist. If people’s intrinsic motivations are to be changed, I believe resources must be (practically) infinite.

When this happens the nature of the Internet will change as well. If the economics change and we are no longer concerned with that, and we also have an altruistic frame of mind, information that is posted on the Internet will similarly change. No longer do we have to actually care about our walled gardens—the information is just put “up there”, in the “cloud”, to use the popular term. A computer would be free to just quote the contents to the user, or recombine it with other content. It’s all just content, with a single interface to access it all.

Understanding

There’s an important issue I glossed over in the above paragraphs. That is understanding. I talked a little about this in my previous blog entry about Software Creativity and Strange Loops.

I’m excited for this future. I doubt I’ll live to see advances fully along these lines. The problems are phenomenally difficult and they’re not all technical, but it’s still exciting to think about. Those of us who can contribute just need to do our small part.

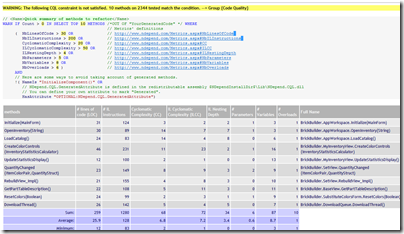

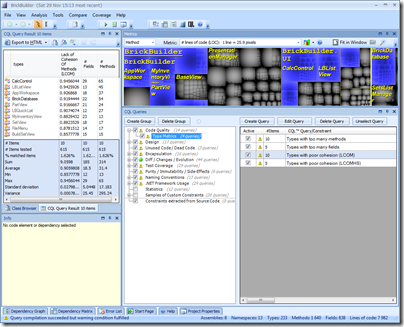

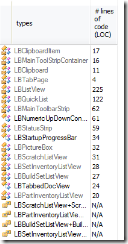
